Introduction

Automated LLM text summarization is the process of condensing large blocks of text into shorter versions using software or AI. It helps counter information overload and fake news, and it makes data and documents easier for people to understand.

However, abstractive LLM text summarization remained difficult for AI until recently. Consider the methods now available: fast word embeddings, recurrent neural networks (RNNs), transformers, and similar innovations have significantly improved the ability of generative AI to read a text well enough to synthesize a short summary.

Transformers are particularly good at condensing a lengthy document into a short response, which is why they excel at LLM text summarization. It is still one of the hardest NLP tasks today, because it demands more than pattern learning: the model has to read a long passage and generate a piece that is coherent and representative of each paragraph.

Types Of Automatic Text Summarization

Abstractive LLM Text Summarization

Summarization of this kind identifies the most important information in the source text and then writes a new summary based on that information.

For example, an abstractive summary of a text on intermittent fasting might look like this:

“There is some evidence that the percentage of time spent not eating might be beneficial for those trying to improve their metabolic health. Intermittent fasting entails alternating periods of not eating with times allowed for eating, such as the 16/8 method or alternate-day fasting. Some research suggests that this approach may increase insulin sensitivity, decrease inflammation, and support weight loss. But there may be other long-term benefits or risks that have never been studied. And the long-term effects on subgroups, like individuals with diabetes or pregnant or breastfeeding women, haven’t been assessed.”

This kind of summarization restates the main points and essential details of a text in different wording. Using this information, the model composes the summary as complete, newly written sentences.

Continuing the above example, we might get the following summary:

“Some research suggests that going without food for certain periods could regulate the body’s energy balance and improve overall metabolic health. Intermittent fasting involves cycles of eating and not eating. Common patterns include the 16/8 method, which involves fasting for 16 hours each day, or alternate-day fasting. Some studies suggest that intermittent fasting may improve insulin sensitivity and reduce inflammation while helping to promote weight loss. However, more research is necessary to determine the long-term effects of the regimen on health, as well as any risks. This is particularly important for specific populations, such as people with diabetes and expectant mothers.”

Extractive LLM Text Summarization

Extractive LLM text summarization builds the summary from important sentences or phrases taken verbatim from the source text. Ranking algorithms assign a score to each sentence, and only the highest-scoring sentences are kept.

Extractive LLM text summarization for the example of intermittent fasting would look something like this:

“Investigations are underway into intermittent fasting as a health promotion strategy. The primary aim of the research is to identify long-term effects and safety on different groups at risk, with possible negative impacts.”
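To make the sentence-ranking idea concrete, here is a minimal sketch of an extractive summarizer. It is not the method of any particular library: it assumes a simple word-frequency score, and the stopword list, the function names, and the sample text are all illustrative.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list (an assumption for this sketch).
STOPWORDS = {"a", "an", "and", "are", "as", "at", "be", "for", "in", "is",
             "it", "of", "on", "or", "that", "the", "to", "with"}

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def tokenize(sentence):
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9']+", sentence.lower())
            if w not in STOPWORDS]

def extractive_summary(text, num_sentences=2):
    """Score each sentence by the average document frequency of its words,
    then return the top-scoring sentences in their original order."""
    sentences = split_sentences(text)
    word_freq = Counter(w for s in sentences for w in tokenize(s))

    def score(sentence):
        words = tokenize(sentence)
        return sum(word_freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore source order
    return " ".join(sentences[i] for i in keep)

text = (
    "Intermittent fasting alternates periods of eating and fasting. "
    "Some studies suggest fasting may improve insulin sensitivity. "
    "The weather was pleasant during the study. "
    "More research on fasting is needed for people with diabetes."
)
print(extractive_summary(text, num_sentences=2))
```

Because the output is made only of sentences copied from the source, it can never introduce wording that was not there, which is the defining property of the extractive approach.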

Step-By-Step State Of The Art Technique

Two families of transformer models, BERT and GPT, can be used for summarization because they capture long-range dependencies and semantic relationships.

Such models are pretrained on large corpora and then fine-tuned for specific tasks to achieve state-of-the-art performance.

Step 1: Import the required libraries

    from datasets import load_dataset
    from transformers import AutoModelForSeq2SeqLM
    from transformers import AutoTokenizer
    from transformers import GenerationConfig

Step 2: Prepare the dataset or corpus

You can use any summarization dataset, but in our case we load the knkarthick/dialogsum dataset from the Hugging Face Hub for dialogue summarization.

    huggingface_dataset_name = "knkarthick/dialogsum"
    dataset = load_dataset(huggingface_dataset_name)
    dataset  # in a notebook, this displays the dataset splits

Here are a few examples from our dataset:

    # Print the dialogue and the human-written summary for two training examples.
    for i, index in enumerate([0, 1]):
        print('Example ', i + 1)
        print('Input dialogue:')
        print(dataset['train'][index]['dialogue'])
        print('Human Summary:')
        print(dataset['train'][index]['summary'])
        print("*************")

Step 3: Load and select the model

Several popular pretrained models can be fine-tuned for summarization, such as GPT-2, PEGASUS, T5, mT5, and BART. For our use case we use FLAN-T5, loaded from Hugging Face by its model ID, because it is a general-purpose transformer that frames every task as a text-to-text problem.

    model_name = 'google/flan-t5-base'
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)

Step 4: Generate output from our model

LLM text summarization of a dialogue without any prompt engineering

    for i, index in enumerate([0, 1]):
        dialogue = dataset['train'][index]['dialogue']
        summary = dataset['train'][index]['summary']

        # Feed the raw dialogue to the model with no instruction at all.
        inputs = tokenizer(dialogue, return_tensors='pt')
        output = tokenizer.decode(
            model.generate(
                inputs["input_ids"],
                max_new_tokens=50,
            )[0],
            skip_special_tokens=True
        )

        print('Example ', i + 1)
        print(f'input prompt:\n{dialogue}')
        print(f'human summary:\n{summary}')
        print(f'model generated text without prompt engineering:\n{output}\n')

Zero-shot Inference with an instruction prompt

    for i, index in enumerate([0]):
        dialogue = dataset['train'][index]['dialogue']
        summary = dataset['train'][index]['summary']

        # Wrap the dialogue in an explicit instruction.
        prompt = f"""
    Summarize the following conversation.

    {dialogue}

    Summary:
    """

        inputs = tokenizer(prompt, return_tensors='pt')
        output = tokenizer.decode(
            model.generate(
                inputs["input_ids"],
                max_new_tokens=50,
            )[0],
            skip_special_tokens=True
        )

        print('Example ', i + 1)
        print(f'input prompt:\n{dialogue}')
        print(f'human summary:\n{summary}')
        print(f'model generated output with zero shot prompt:\n{output}\n')

Zero-shot Inference with a prompt template

    for i, index in enumerate([0, 1]):
        dialogue = dataset['train'][index]['dialogue']
        summary = dataset['train'][index]['summary']

        # Same task, phrased as a question-style template.
        prompt = f"""
    Dialogue:

    {dialogue}

    What was going on?
    """

        inputs = tokenizer(prompt, return_tensors='pt')
        output = tokenizer.decode(
            model.generate(
                inputs["input_ids"],
                max_new_tokens=50,
            )[0],
            skip_special_tokens=True
        )

        print('Example ', i + 1)
        print(f'input prompt:\n{dialogue}')
        print(f'human summary:\n{summary}')
        print(f'model generated output with zero shot prompt:\n{output}\n')

LLM text summarization with one shot and few shot inference.
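One-shot and few-shot prompts differ from a zero-shot prompt only in how many solved examples are placed before the dialogue to summarize. As a rough sketch, that construction can be factored into a small helper. The function name `make_prompt`, its parameters, and the toy records are assumptions made for illustration; they are not part of the dataset or of any library.

```python
def make_prompt(examples, dialogue_to_summarize, question="What was going on?"):
    """Build a zero-, one-, or few-shot prompt.

    examples: list of dicts with 'dialogue' and 'summary' keys (hypothetical
    in-memory records shaped like the dataset rows used in this article).
    An empty list yields a zero-shot prompt.
    """
    parts = []
    for example in examples:
        # A solved example: dialogue, question, and the human-written answer.
        parts.append(
            f"Dialogue:\n\n{example['dialogue']}\n\n{question}\n{example['summary']}\n"
        )
    # The dialogue to summarize, with the answer left open for the model.
    parts.append(f"Dialogue:\n\n{dialogue_to_summarize}\n\n{question}\n")
    return "\n".join(parts)

# Toy records for illustration only.
examples = [
    {
        "dialogue": "#Person1#: Is the report ready?\n#Person2#: Yes, I sent it this morning.",
        "summary": "#Person2# tells #Person1# the report was sent this morning.",
    },
]
target = "#Person1#: Can we meet at noon?\n#Person2#: Sure, see you then."

zero_shot = make_prompt([], target)
one_shot = make_prompt(examples, target)
print(one_shot)
```

Passing one record gives a one-shot prompt and passing several gives a few-shot prompt, so the number of examples can be varied without touching the generation code. The inline version that follows builds the same kind of prompt directly from the dataset.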

    # Build the few-shot part of the prompt from three solved examples.
    prompt = ''
    for index in [20, 40, 60]:
        dialogue = dataset['train'][index]['dialogue']
        summary = dataset['train'][index]['summary']
        prompt += f"""
    Dialogue:

    {dialogue}

    What was going on?
    {summary}

    """

    # Append the dialogue to summarize and leave its answer open.
    dialogue = dataset['train'][0]['dialogue']
    # Use the reference summary of the same dialogue, not of the last example.
    summary = dataset['train'][0]['summary']
    prompt += f"""
    Dialogue:

    {dialogue}

    What was going on?
    """

    inputs = tokenizer(prompt, return_tensors='pt')
    output = tokenizer.decode(
        model.generate(
            inputs["input_ids"],
            max_new_tokens=50,
        )[0],
        skip_special_tokens=True
    )

    print(f'human summary:\n{summary}\n')
    print(f'model generated output:\n{output}')

Evaluation Metrics: LLM Text Summarization

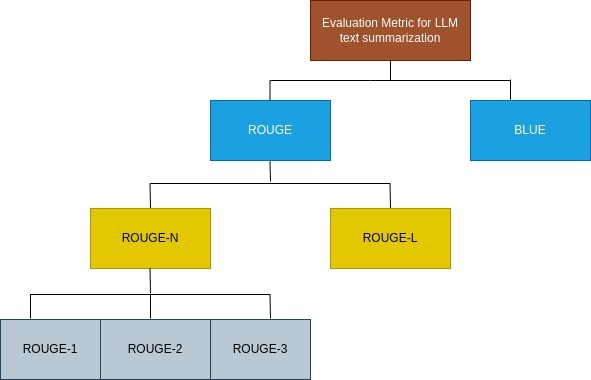

ROUGE

A widely used way of assessing summarization quality is to compare generated summaries against reference summaries; the ROUGE metrics are designed to do this.

These metrics are built around the concept of recall: how much of the information in a reference summary also appears in the generated summary.
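The recall idea can be written out by hand. The following is a minimal sketch of ROUGE-N computed from clipped n-gram overlap, assuming plain whitespace tokenization and lowercasing; the function names and the two toy sentences are illustrative only.

```python
from collections import Counter

def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n(generated, reference, n=1):
    """ROUGE-N recall, precision and F1 from clipped n-gram overlap."""
    gen = ngrams(generated.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter '&' keeps the minimum count of each shared n-gram.
    overlap = sum((gen & ref).values())
    recall = overlap / max(sum(ref.values()), 1)      # share of the reference covered
    precision = overlap / max(sum(gen.values()), 1)   # share of the output that matches
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"r": recall, "p": precision, "f": f1}

generated = "the cat sat on the mat"
reference = "the cat lay on the mat"
print(rouge_n(generated, reference, n=1))  # 5 of 6 reference unigrams are matched
print(rouge_n(generated, reference, n=2))  # 3 of 5 reference bigrams are matched
```

Library implementations differ in tokenization, stemming, and counting details, so their scores may not match this sketch exactly.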

    from rouge import Rouge

    gen_summary = "Smith is examined and Dr. Hawkins recommends taking the annual exam. Dr. Hawkins will give some information about his classes and medications to help Mr. Hawkins. Smith quit smoking."
    ref_summary = "Dr. Accepted Hawkins Bay. Smith does this every year. Dr. Hawkins will give some information about his classes and medications to help Mr. Hawkins. Smith quit smoking."

    # get_scores takes the generated summary first, then the reference.
    rouge = Rouge()
    scores = rouge.get_scores(gen_summary, ref_summary)
    print(scores)

Output:

    [{'rouge-1': {'r': 1.0, 'p': 0.8148148148148148, 'f': 0.897959178725531}, 'rouge-2': {'r': 0.9130434782608695, 'p': 0.7241379310344828, 'f': 0.8076923027588757}, 'rouge-l': {'r': 1.0, 'p': 0.8148148148148148, 'f': 0.897959178725531}}]

BLEU

Another metric used to score summaries is BLEU. Originally developed for assessing machine translation, it approximates fluency and adequacy by comparing the automatically generated summary with one or more reference summaries.

BLEU asks: what percentage of the n-grams in a generated summary also appear in the reference summaries?
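To show what that percentage means in practice, here is a minimal sketch of sentence-level BLEU: clipped n-gram precisions combined by a geometric mean, multiplied by a brevity penalty. It assumes pre-tokenized input and uniform weights, and the function names are illustrative; library implementations may differ, for instance in how counts are aggregated across a corpus.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, references, n):
    """Share of candidate n-grams found in the references, with counts clipped
    to the maximum number of times each n-gram occurs in any one reference."""
    cand = ngram_counts(candidate, n)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        max_ref |= ngram_counts(ref, n)  # '|' keeps the maximum count
    clipped = sum((cand & max_ref).values())
    return clipped / sum(cand.values())

def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with uniform weights over 1..max_n grams."""
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # any zero precision drives the geometric mean to zero
    log_mean = sum(math.log(p) for p in precisions) / max_n
    # Brevity penalty: penalize candidates shorter than the closest reference.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    penalty = 1.0 if c > r else math.exp(1 - r / c)
    return penalty * math.exp(log_mean)

candidate = "the cat sat on the mat".split()
reference = "the cat lay on the mat".split()
print(f"BLEU-2: {bleu(candidate, [reference], max_n=2):.4f}")
```

Note the contrast with ROUGE: BLEU divides by the number of n-grams in the generated text, so it is a precision-oriented score, and the brevity penalty is what stops very short outputs from scoring well.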

    from torchtext.data.metrics import bleu_score

    # bleu_score expects tokenized text: a list of candidates, and for each
    # candidate a list of tokenized references.
    gen_summary_tokens = gen_summary.split()
    ref_summary_tokens = ref_summary.split()

    score = bleu_score([gen_summary_tokens], [[ref_summary_tokens]])
    print(f'BLEU score: {score*100:.2f}')

Output:

    BLEU score: 70.53

Conclusion

LLM text summarization is a technique that shortens long texts, making knowledge across various disciplines easier to access.

Transformer models and metrics such as ROUGE and BLEU have played a significant role in improving text summarization, despite the difficulties faced during implementation.

The next steps include multimodal summarization and more resource-efficient, transparent handling of information.

FAQs

In dialogue settings, summarization works turn by turn: the short description of each turn depends both on that turn and on the shape of the conversation as a whole.

The advantages of LLMs for text summarization are that they handle many human languages, such as English and Spanish, they can process text and analyze its meaning, and they can generate coherent output.

The key steps in implementing LLM-based text summarization are text preprocessing, fine-tuning the model for the summarization task, and evaluating the generated summaries for accuracy.

LLMs improve text summarization in dialogue conversations through prompting techniques such as zero-shot, one-shot, and few-shot inference, which can produce better summaries of the content.